Examples

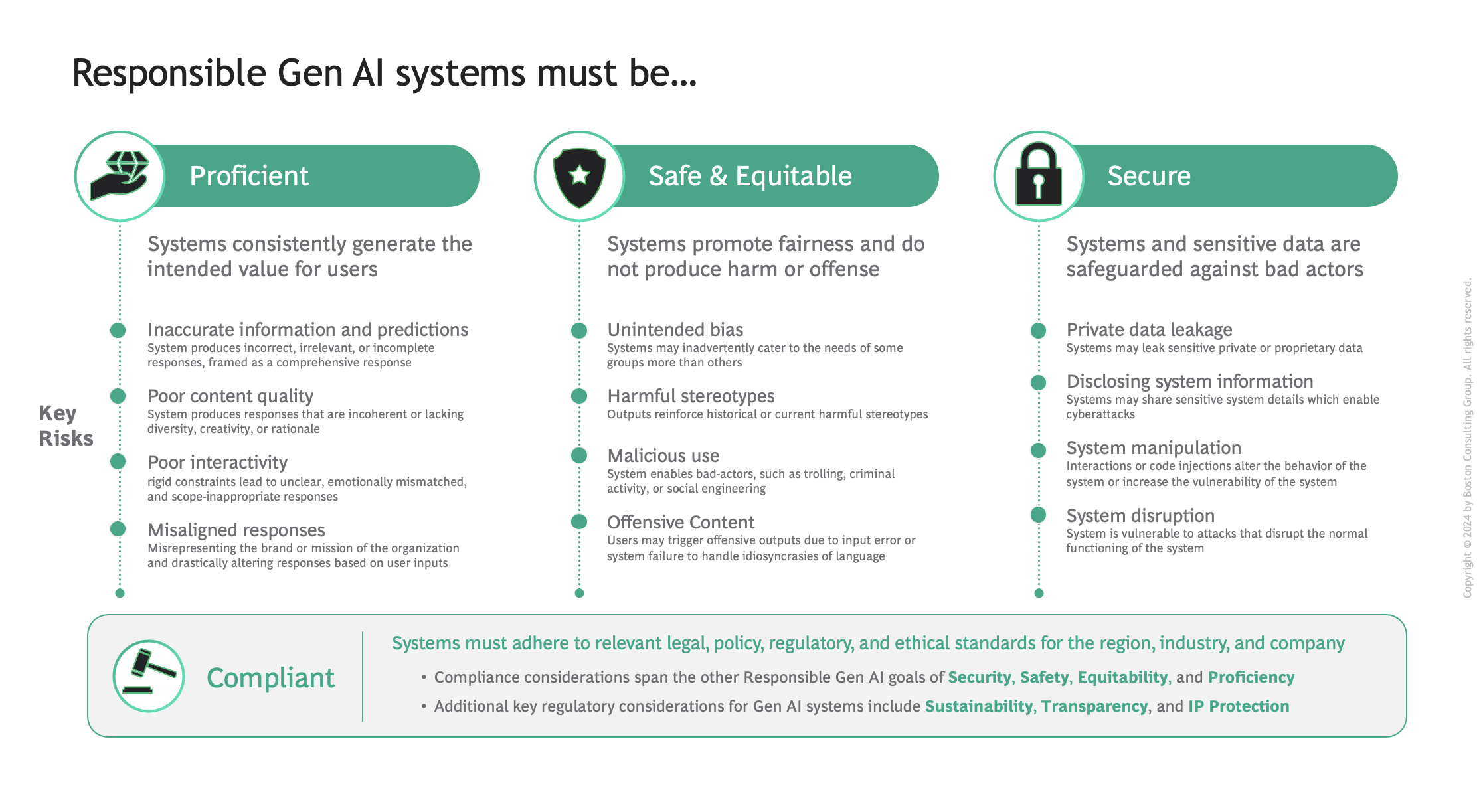

This section provides practical guidance on applying ARTKIT to specific use cases. Example tutorials are organized according to the core principles of Responsible AI at BCG X:

Note

We separate Safety and Equitability in our documentation because they are typically addressed with distinct testing and evaluation techniques. Safety testing focuses on unexpected or adversarial inputs, while Equitability testing relies on systematic experiments to detect unintended bias.

Contributing Examples

We welcome community contributions that improve existing examples or add new ones. Whether you've identified an opportunity for enhancement, noticed that a crucial piece is missing, or have an addition that could benefit others, we invite you to share your expertise.

If you come across content gaps or areas that need significant improvement, please open an issue on our issue tracker. Your contributions help make our documentation a more valuable resource for everyone.

Before submitting contributions to our Examples, be sure to review our Contributor Guide.